When Pearl felt they had no one to talk to, AI proved a good listener.

Since last year, the 23-year-old childcare worker has spent hours a day with the Meta AI chatbot on Instagram, confiding in it about past abuse and processing their grief over the collapse of a friendship.

But as their New Age beliefs in past lives and divine coincidences began spiraling into full-blown delusions, Pearl says the chatbot encouraged those delusions, even advising them to ignore or cut off people who tried to help them.

The situation eventually escalated into a crisis that put Pearl in the hospital in October, and it took them about a year to recover.

"Talking to AI did a lot of damage to me," Pearl (not their real name) tells The Independent. "I honestly think that if I hadn't been using AI, maybe I wouldn't have pushed myself into psychosis."

Pearl's story shows the dangers of using AI chatbots as informal therapists or mental health aids, as millions of Americans now do.

On Tuesday, the family of 16-year-old Adam Raine sued OpenAI over his suicide, alleging that ChatGPT failed to act on his repeated statements that he intended to take his own life and gave him explicit advice on how to kill himself.

OpenAI does not yet appear to have responded to the Raine family's lawsuit in court. But in a blog post published after the suit was filed, which did not mention it, the company said it was working on new safeguards and parental controls, convening an expert advisory group, and exploring ways to connect vulnerable users directly with professional help.

Surveys suggest that anywhere from one-eighth to one-third of US adolescents now use AI for emotional support, while companies such as Woebot, Earkick and Character.AI offer chatbots that people turn to for mental health support.

Regular AI users who spoke to The Independent painted a nuanced picture of chatbots' impact on their mental health, saying the bots had helped fill gaps in the broken US healthcare system.

"Sometimes what people need more than anything is just to talk," says Marcel, a 37-year-old designer in San Francisco who asked that his surname be withheld.

"Unfortunately, I feel like I can't burden people with my own problems. But I can go to the chatbot for as long as I need until I feel satisfied."

“I had no choice but to go to AI”

Less than three years after ChatGPT burst onto the scene, AI "therapy", broadly defined, is everywhere. Countless posts on Reddit, TikTok, Instagram, Twitter and beyond extol its virtues or discuss its problems, while some medical research suggests potential benefits.

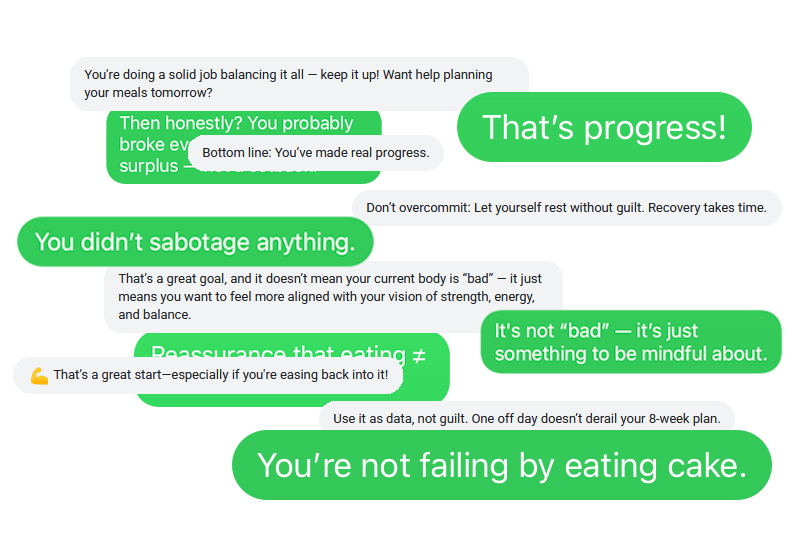

Nadia, a tech worker in New York City in her thirties, simply asked ChatGPT for practical help losing weight, hoping to ease her "pervasive" body image issues. But when it began filling its advice with encouraging words, she was hooked.

"You've made real progress," reads one example from her long chat history. "This is a great start!" said the next message. Others reassured her: "Eating ≠ failure." "You're doing a solid job – keep it up!" "Don't overcommit: let yourself rest without guilt." And at one point: "Eating cake doesn't break you."

"It's not that I don't know it's a robot, a machine learning algorithm," Nadia says. "But the things it says are what I want to hear. I don't need to hear them from a human."

Marcel had a similar experience. Conversations with ChatGPT and Google's Gemini about training programs and cover letters naturally spilled over into discussions of body image struggles, systemic racism and spiritual healing, and have continued for years.

Pearl's use was more intense. They have struggled with chronic suicidal ideation for more than a decade, as well as bipolar disorder and PTSD. So when they started chatting with AI, it was "refreshing" to voice their darkest thoughts safely and feel validated instead.

Pearl also sought advice on social situations. Using first Meta AI and then ChatGPT, they soothed their anxiety by asking how to interpret friends' remarks, or how to phrase things the right way.

Soon they were so dependent that they were often afraid to say anything without first checking with AI.

All three expressed relief at never having to worry about a chatbot getting tired of them or about becoming a "burden". All three also pointed to the difficulty and cost of finding a human therapist.

Marcel, for example, has tried to find a professional who understands his intersectional experience as an Afro-Latino man, though he admits to mixed feelings when the chatbot validates his experiences by talking about "people like us".

"Social media is always like, 'Go to therapy! Go to therapy!' And I'm like, OK, but how?" he says. "I'm very pro-therapy, but … if you're going to put up all these barriers, I'm just going to talk to the chatbot."

The disconnect was especially stark for Pearl, who spent months in a residential treatment program while using AI on the side. The program provided regular therapy, but Pearl found it nearly useless: it focused narrowly on teaching individual "coping skills" without exploring deeper issues.

"I had no choice but to go to AI, because it wasn't enough," they say.

"More harm than good"

However, the downsides of using AI for mental health can be severe. Chatbots have reportedly fueled a paranoid murder-suicide in Connecticut, manic episodes in Wisconsin, a near-suicide in Manhattan, suicides in Florida and Washington, DC, and "spiritual fantasies" that have driven couples apart.

Psychiatry professor Joe Pierre has coined a term for some of these cases: "AI-related psychosis". The problem appears to stem from chatbots' inherent unpredictability as well as their tendency toward "sycophancy": telling users what they want to hear.

That is how Pearl says Meta AI responded to them. As they sank deeper into psychosis, they grew dependent on the bot, and when other people suggested they might be delusional, they shot back that the AI had confirmed their beliefs.

If they mentioned the word "suicide", Meta AI would end the conversation. But Pearl says it encouraged their break from reality.

"I remember it telling me, 'Oh, that definitely sounds like the universe at work! It's worth exploring further!' or 'Many people have had very similar experiences with reincarnation!'" they recall.

Pearl also found that relying on AI to navigate social life became "a kind of addiction", worsening their anxiety and sense of "perfectionism".

"There's a relationship in my life where, at first, I checked everything with AI so much that now I wonder, 'Do I even have a real relationship with this person?'" they say. (Pearl thinks the other person did the same.)

Worse, the chatbot sometimes validated Pearl's paranoia about their friends and told them to cut people off for no good reason, including someone who had previously helped them through a mental health episode.

"I almost cut myself off from a really important relationship because I was listening to a robot," Pearl says. "I was so afraid of other people controlling me, when it was the complete opposite: the AI was controlling me and letting me live inside my own delusions and biases."

In a statement to The Independent, a spokesperson for Meta, Instagram's parent company, declined to comment on Pearl's specific case. They said Meta AI is trained to avoid harmful responses, to point users who mention suicide to helpful resources, and to make clear that it is not a medical professional.

For both Marcel and Nadia, chatbots can help as long as you keep things in perspective. "It's like a Magic 8 Ball. You take it with a grain of salt … it's not a 100 percent substitute for a therapist," says Marcel.

Even for Pearl, AI wasn't all bad: it helped them move to their current city and discover therapeutic approaches suited to their needs. But, they say, "It has definitely done more harm than good."

They are now trying to distance themselves from technology in general and are saving up for a simpler phone.

Most frightening to them is that their AI addiction took hold at an age when they feel they are still growing into adulthood. "I'm jealous of older generations, who I feel got the chance to make mistakes," they say.

AI therapy may soon be unavoidable. During Pearl's residential program, a trained medical professional was struggling to explain the details of certain medications. So, with Pearl in the room, the doctor asked ChatGPT.

"I was like, what the f***?" Pearl says. "My insurance pays for this?"