The speaker's mouth moves in time with their words. Their eyes are locked on you and follow you as they speak. As a faint smile flickers, creases form beside their mouth.

You can't be sure that you are not looking at a fake.

There was a time when you had no reason to doubt that the person at the other end of a video call was who they appeared to be. It wasn't all that long ago. But face swapping, a rapidly developing application of artificial intelligence technology, has called that trust into question.

Observers have warned that, as once-crude face replicas become harder to identify as fake, the opportunity for criminals to use them in fraud schemes is growing.

David Maimon, head of fraud insights at SentiLink and a professor at Georgia State University, follows the movements of scam gangs on social media and messaging platforms such as Telegram. He has seen fraudsters taking up face-swapping technology in earnest and spreading the word on how to use it.

“The fraudsters often operate in groups, allowing them to share knowledge of new technologies, swap advice on improving their tactics and even pass around the details of victims,” David says. “Part of their communication includes bragging about their fraudulent activities, and they often share footage of live scams to showcase their skills and gain credibility in their circles.”

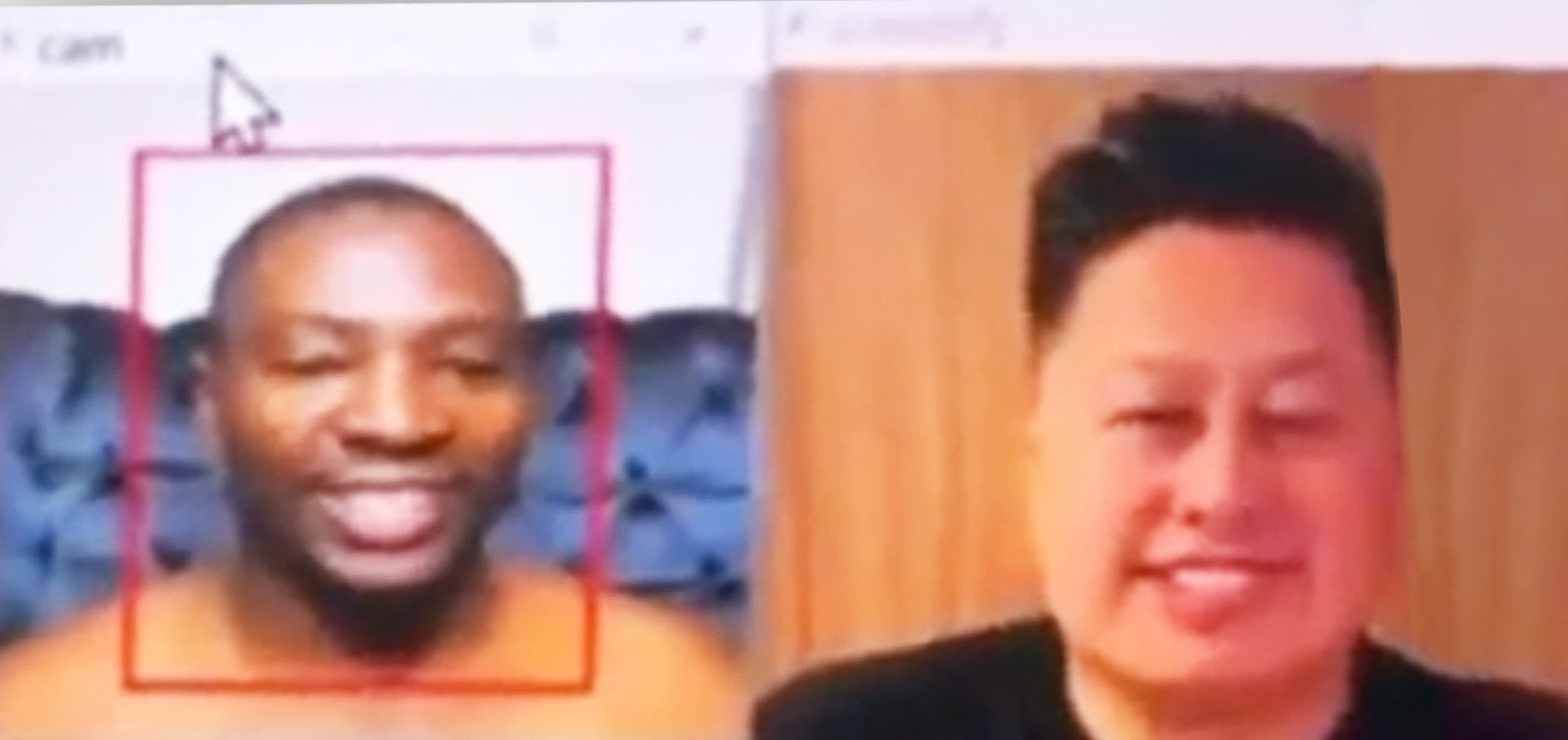

The video that David obtained from one of these groups shows a man demonstrating his use of face-swapping technology to appear before a potential victim in what is known as a romance scam. In the clip, the man is seen on a call with a woman. His true face is shown alongside a completely different face that instantly mirrors his movements. The woman, who can see only the altered face, appears happily engaged in the conversation and shows no sign of suspecting that she is talking to a man wearing a remarkably convincing mask.

“Face-swapping technology and live streaming have improved dramatically over the past year and a half,” says David. “A few years ago, face swapping was possible, but broadcasting a swapped face in real time during a live conversation posed significant challenges. Today, countless software tools can support live face replacement.”

Romance fraud is a trap that preys on lonely hearts. Fraudsters lure a victim with affection and the promise of love. Then they steal their money. Sometimes they cut off contact afterwards, even though the relationship may have lasted months or even years.

In a recent high-profile example, a woman paid more than £700,000 to a fraudster who used deepfake photos to pretend to be Brad Pitt. The fraudster convinced the divorced woman that the Hollywood star had fallen on hard times and desperately needed money to pay his medical costs.

It is difficult to believe that anyone could be deceived by such a story. However, the sad reality is that someone had the will and the skill to sell that scenario to an unsuspecting person, and the tools available to them are advancing rapidly.

The capacity of face swapping to deceive is reinforced by voice-changing technology. Every major dating app offers safety advice to users, which consistently suggests that a phone or video call can be used to vet potential dates. But they do not warn that either of these lines of communication can be used to hide the caller's identity.

“The combination of face- and voice-swapping technologies makes online fraud simpler for perpetrators and makes it harder for targets to spot,” says David.

The latest research shows that deepfake voice technology is already in use. Last year in the UK, according to a call protection company, 26% of consumers received a deepfake voice call. The amounts lost in these calls are significant: an average of £13,342 was reported, more than 10 times the average for fraud.

This technology is not limited to romance fraud. It is also used in investment scams and a range of other online frauds that target individuals as well as companies.

“We are seeing this technology used to open new bank accounts, to impersonate CEOs and trick employees into sending money to fake vendors, and to carry out extortion scams,” David says.

A 2024 survey by Regula Forensics showed that 49% of companies had been targeted by both voice and video deepfakes, up from 37% and 29% respectively in 2022. Ferrari was the target of one such fraud, in which a scammer accurately imitated the southern Italian accent of the company's chief executive.

The fraud was foiled when the employee who had been contacted, suspicious of a request about an urgent deal, asked a question about a book their boss had recently recommended. Months earlier, a Hong Kong-based employee at a British engineering company was less fortunate, and was fooled by a video call into transferring £20 million to criminals.

The money taken in such frauds is mostly transferred to international criminal enterprises. Gangs based primarily in West Africa and Southeast Asia take money from a country such as the UK and pass it to organized crime networks to fund other illegal activities.

“Scams and fraud are inherently linked to organized criminal gangs,” said Simon Miller, director of policy, strategy and communications at Cifas, the UK's leading fraud prevention service.

“It is estimated that 72 to 73 per cent of all fraud involves an actor outside the UK.”

Earlier this month, researchers in Nigeria said they had found evidence linking the infamous “Yahoo Boys” fraudsters to kidnapping, ritual killings and weapons trafficking. The “Yahoo Boys” take their name from the email service they used in the early days of their online fraud.

It is a name that the development of technology has made quaint, but it points to the lasting challenge that fraudsters pose: they change with the times.

And the times are changing fast.